Description

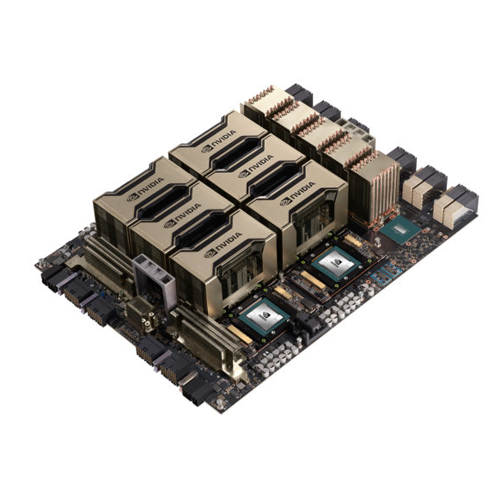

The NVIDIA A100 80GB PCIe is a data center GPU built on the NVIDIA Ampere architecture, designed to accelerate AI, high-performance computing (HPC), and data analytics workloads at scale. With 80GB of HBM2e memory and roughly 1.9 TB/s of memory bandwidth (1,935 GB/s), the A100 keeps larger models and datasets on a single card, which speeds up training and inference for memory-bound workloads.

With Multi-Instance GPU (MIG) technology, a single A100 can be partitioned into up to seven isolated GPU instances, each with its own dedicated compute and memory (as little as 10GB per instance on the 80GB card), so multiple users or workloads can share one GPU without contending for resources. Whether you’re developing deep learning models, running simulation-based research, or scaling cloud-based services, the A100 PCIe combines high performance with the reliability and scalability that data center deployments need.
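
To make the MIG partitioning concrete, here is a minimal Python sketch of how instance profiles draw on the card's resources. It assumes the commonly documented A100 80GB profile names (`1g.10gb` through `7g.80gb`) and a simplified budget of 7 compute slices and 8 memory slices. The `fits` helper is hypothetical and for illustration only: it checks slice totals and ignores the placement rules the real driver enforces, so treat it as a rough planning aid rather than a substitute for NVIDIA's tooling.

```python
# Simplified model of MIG slice budgeting on an A100 80GB.
# Assumption: each profile maps to (compute slices, memory slices);
# real MIG placement has additional constraints not modeled here.
PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}

TOTAL_COMPUTE_SLICES = 7  # upper bound on GPU instances
TOTAL_MEMORY_SLICES = 8   # roughly 10GB each on the 80GB card


def fits(layout):
    """Return True if the requested profiles stay within the slice budget."""
    compute = sum(PROFILES[name][0] for name in layout)
    memory = sum(PROFILES[name][1] for name in layout)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


if __name__ == "__main__":
    print(fits(["1g.10gb"] * 7))          # seven small instances
    print(fits(["3g.40gb", "4g.40gb"]))   # two larger instances
    print(fits(["4g.40gb", "4g.40gb"]))   # exceeds the compute budget
```

In this model, seven `1g.10gb` instances or one `3g.40gb` plus one `4g.40gb` fit on a single card, while two `4g.40gb` instances do not, because together they would need eight compute slices.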